The Actor-Critic method is one of the most widely used families of reinforcement learning (RL) algorithms, combining the strengths of policy-based and value-based approaches. This article explains what the Actor-Critic method is, why it matters in RL, and how to implement a dummy version of it in simple terms that anyone with basic programming knowledge can follow.

What Is the Actor-Critic Method?

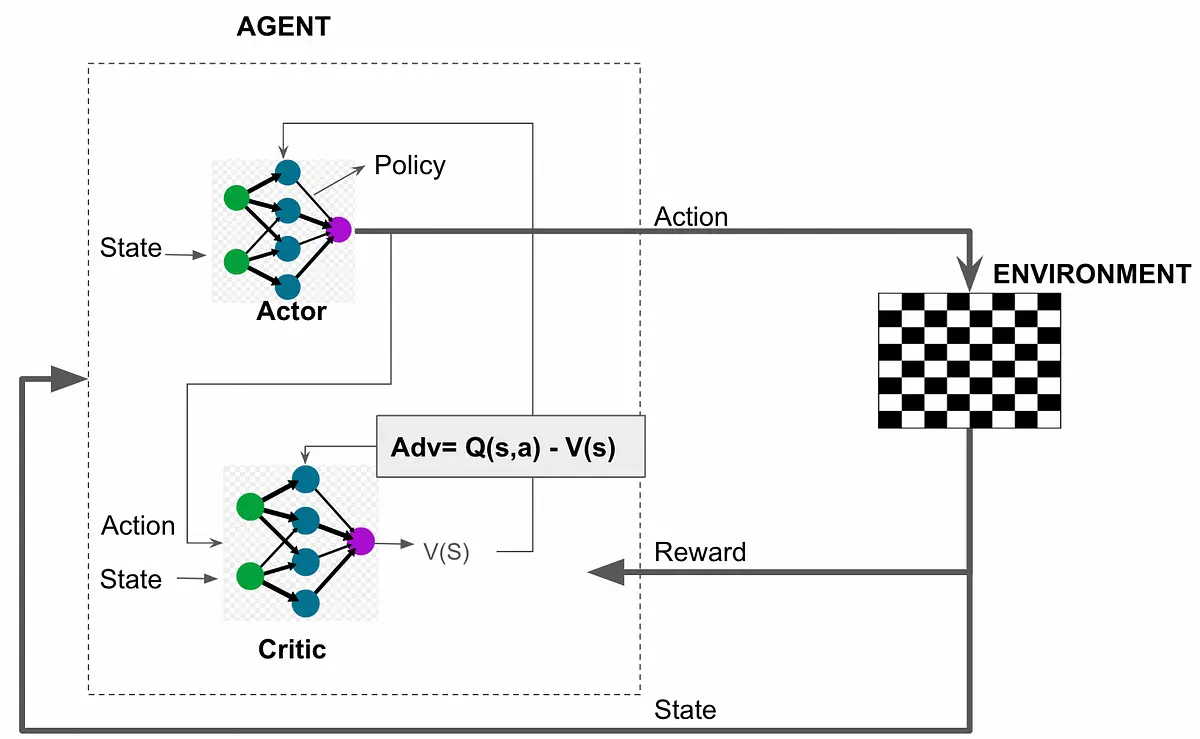

Reinforcement learning involves training an agent to make decisions by interacting with its environment to maximize cumulative rewards. In this paradigm, the Actor-Critic method is a hybrid approach that combines two key components:

- Actor: This component is responsible for choosing actions based on the policy (a function that maps states to actions). It determines what to do in a given state.

- Critic: This evaluates the actions taken by the actor by estimating the value function. It tells the actor how good its actions are.

Why Combine Actor and Critic?

The Actor-Critic method blends the benefits of two common approaches in RL:

- Policy-based methods (like the Actor): Directly optimize the policy but can have high variance.

- Value-based methods (like the Critic): Learn value estimates that reduce variance, at the cost of some bias, and do not represent the policy directly, which limits them when the policy is complex.

By combining these, the Actor-Critic method provides a balance between bias and variance, leading to more stable training.
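To make the variance side of this trade-off concrete, the sketch below compares two learning targets on made-up data: the full discounted return a pure policy-based method would use, and the one-step bootstrapped target a Critic supplies. The function names, the discount factor of 0.9, the random rewards, and the assumption that the Critic currently estimates zero for every state are all illustrative choices, not part of any particular library.

```python
import numpy as np

def monte_carlo_return(rewards, gamma=0.9):
    """Full discounted return of one episode: unbiased, but noisy."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g

def td_target(reward, next_value, gamma=0.9):
    """One-step bootstrapped target: less noisy, but biased by next_value."""
    return reward + gamma * next_value

rng = np.random.default_rng(0)
# 1000 hypothetical episodes of 10 random rewards each, drawn from {-1, 0, 1}
episodes = rng.choice([-1, 0, 1], size=(1000, 10))

mc_targets = np.array([monte_carlo_return(ep) for ep in episodes])
# Assumption: the Critic currently estimates V(next_state) = 0 everywhere
td_targets = np.array([td_target(ep[0], next_value=0.0) for ep in episodes])

print("variance of Monte Carlo targets:", mc_targets.var())
print("variance of one-step TD targets:", td_targets.var())
```

On this toy data the one-step target varies far less from episode to episode, but it is only as accurate as the Critic’s estimate, which is where the bias comes from.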

Key Terms You Need to Know

Before diving into implementation, let’s go over a few important terms in RL:

- State (s): A representation of the current situation the agent is in.

- Action (a): A decision made by the agent, such as moving left or right.

- Reward (r): Feedback from the environment after taking an action, indicating how good or bad the action was.

- Policy (π): A strategy that defines how the agent behaves in each state.

- Value Function (V): An estimate of the expected cumulative (discounted) reward the agent will collect starting from a given state while following its policy.
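As a worked illustration of the last term, assume a discount factor $\gamma$ between 0 and 1 (a symbol the list above does not define) and write $r_t$ for the reward received at step $t$. The value function of a policy $\pi$ is then commonly written as:

$$
V^{\pi}(s) = \mathbb{E}_{\pi}\left[\sum_{k=0}^{\infty} \gamma^{k} r_{t+k} \,\middle|\, s_t = s\right]
$$

For example, with rewards 1, 0, 1 over three steps and $\gamma = 0.9$, the discounted sum is $1 + 0.9 \cdot 0 + 0.81 \cdot 1 = 1.81$.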

Actor-Critic Algorithm Explained

The Actor-Critic algorithm works as follows:

- Initialize the Actor (policy) and Critic (value function) models.

- For each episode:

  - Start from an initial state $s_0$.

  - At each time step $t$:

    - The Actor selects an action $a_t$ based on the current state $s_t$.

    - The environment provides a reward $r_t$ and a new state $s_{t+1}$.

    - The Critic evaluates the Actor’s action by calculating the Temporal Difference (TD) error: $\delta_t = r_t + \gamma V(s_{t+1}) - V(s_t)$. Here, $\gamma$ is the discount factor that balances immediate and future rewards.

    - Update the Actor using the TD error to refine the policy.

    - Update the Critic to improve the value function.

- Repeat until the agent learns an optimal policy.
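The TD error step above can be checked with a few numbers. This is a minimal sketch: `td_error` is a hypothetical helper, and the two value estimates, the reward, and the learning rate of 0.1 are chosen purely for illustration.

```python
def td_error(reward, value_s, value_next, gamma=0.9):
    """TD error: reward + gamma * V(next state) - V(current state)."""
    return reward + gamma * value_next - value_s

# Illustrative numbers: V(s_t) = 0.5, V(s_{t+1}) = 1.0, r_t = 1
delta = td_error(reward=1.0, value_s=0.5, value_next=1.0)
print(f"TD error: {delta:.2f}")  # computed as 1 + 0.9 * 1.0 - 0.5

# Critic update with an assumed learning rate of 0.1
beta = 0.1
new_value_s = 0.5 + beta * delta
print(f"Updated V(s_t): {new_value_s:.2f}")
```

Here the TD error is positive (about 1.4), meaning the outcome was better than the Critic expected. The Critic raises its estimate for the state, and the Actor would make the chosen action more likely. A negative TD error pushes both in the opposite direction.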

Dummy Implementation of Actor-Critic

Let’s break down the implementation of a basic Actor-Critic algorithm using Python. This dummy implementation assumes you have basic knowledge of Python and libraries like NumPy.

Step 1: Import Required Libraries

```python
import numpy as np
```

Step 2: Define the Environment

We’ll create a simple environment with states and rewards.

```python
class DummyEnvironment:
    def __init__(self):
        self.state_space = 5  # Number of states
        self.action_space = 3  # Number of actions
        self.current_state = 0

    def reset(self):
        self.current_state = 0
        return self.current_state

    def step(self, action):
        # Reward is randomly assigned for this dummy example,
        # so the action does not influence the outcome
        reward = np.random.choice([-1, 0, 1])
        # States are visited in a fixed cycle: 0, 1, 2, 3, 4, 0, ...
        self.current_state = (self.current_state + 1) % self.state_space
        return self.current_state, reward
```
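Because the dummy environment hands out rewards at random, no action is ever better than another, so there is nothing for the agent to learn. The sketch below is a hypothetical variant, not part of the original walkthrough, in which one action is always rewarded. The class name `ActionRewardEnvironment` and the `best_action` parameter are assumptions made for illustration.

```python
class ActionRewardEnvironment:
    """Hypothetical variant of the dummy environment: reward depends on the action."""

    def __init__(self, best_action=2):
        self.state_space = 5   # Number of states
        self.action_space = 3  # Number of actions
        self.best_action = best_action  # Assumption: one action is always best
        self.current_state = 0

    def reset(self):
        self.current_state = 0
        return self.current_state

    def step(self, action):
        # +1 for the preferred action, -1 for any other action
        reward = 1 if action == self.best_action else -1
        self.current_state = (self.current_state + 1) % self.state_space
        return self.current_state, reward

demo_env = ActionRewardEnvironment()
demo_env.reset()
for demo_action in [0, 1, 2]:
    demo_next_state, demo_reward = demo_env.step(demo_action)
    print(f"action={demo_action} -> next_state={demo_next_state}, reward={demo_reward}")
```

It exposes the same `reset` and `step` interface, so it could stand in for the dummy environment when you want to see the policy shift toward a rewarded action.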

Step 3: Initialize the Actor and Critic

For simplicity, we use tables to represent the Actor (policy) and Critic (value function).

```python
class ActorCritic:
    def __init__(self, state_space, action_space, alpha=0.1, beta=0.1, gamma=0.9):
        self.state_space = state_space
        self.action_space = action_space
        self.alpha = alpha  # Learning rate for Actor
        self.beta = beta  # Learning rate for Critic
        self.gamma = gamma  # Discount factor

        # Policy (Actor) initialized to uniform probabilities
        self.policy = np.ones((state_space, action_space)) / action_space

        # Value function (Critic) initialized to zeros
        self.value_function = np.zeros(state_space)

    def choose_action(self, state):
        # Choose action based on policy probabilities
        return np.random.choice(self.action_space, p=self.policy[state])

    def update(self, state, action, reward, next_state):
        # Calculate TD error
        td_error = reward + self.gamma * self.value_function[next_state] - self.value_function[state]

        # Update value function (Critic)
        self.value_function[state] += self.beta * td_error

        # Update policy (Actor)
        self.policy[state, action] += self.alpha * td_error

        # Clip to a small positive floor so a negative TD error cannot
        # produce negative probabilities, then normalize so the row sums to 1
        self.policy[state] = np.clip(self.policy[state], 1e-6, None)
        self.policy[state] /= np.sum(self.policy[state])
```
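Adjusting probabilities directly, as the table-based Actor does, is a simplification. A more conventional tabular Actor stores one preference per state-action pair and converts the preferences to probabilities with a softmax, which keeps every probability positive without extra bookkeeping. The sketch below swaps in that technique. The class name `SoftmaxActorCritic`, the `preferences` table, and the `seed` parameter are assumptions for illustration, not part of the implementation above.

```python
import numpy as np

class SoftmaxActorCritic:
    """Tabular Actor-Critic sketch with a softmax policy over action preferences."""

    def __init__(self, state_space, action_space, alpha=0.1, beta=0.1, gamma=0.9, seed=0):
        self.action_space = action_space
        self.alpha = alpha  # Learning rate for Actor
        self.beta = beta    # Learning rate for Critic
        self.gamma = gamma  # Discount factor
        self.preferences = np.zeros((state_space, action_space))  # Actor
        self.value_function = np.zeros(state_space)               # Critic
        self.rng = np.random.default_rng(seed)

    def policy(self, state):
        # Softmax with the maximum subtracted for numerical stability
        prefs = self.preferences[state]
        exp_prefs = np.exp(prefs - prefs.max())
        return exp_prefs / exp_prefs.sum()

    def choose_action(self, state):
        return int(self.rng.choice(self.action_space, p=self.policy(state)))

    def update(self, state, action, reward, next_state):
        td_error = reward + self.gamma * self.value_function[next_state] - self.value_function[state]
        self.value_function[state] += self.beta * td_error
        # Gradient of log pi(action | state) with respect to the preferences:
        # one-hot(action) minus the current action probabilities
        grad_log_pi = -self.policy(state)
        grad_log_pi[action] += 1.0
        self.preferences[state] += self.alpha * td_error * grad_log_pi
        return td_error

sketch_agent = SoftmaxActorCritic(state_space=5, action_space=3)
sketch_agent.update(state=0, action=2, reward=1, next_state=1)
print(sketch_agent.policy(0))  # probability of action 2 rises above 1/3
```

Because the probabilities are always computed through the softmax, they remain valid no matter how large or negative the TD error is.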

Step 4: Train the Agent

```python
# Initialize environment and agent
env = DummyEnvironment()
agent = ActorCritic(state_space=env.state_space, action_space=env.action_space)

# Training loop
episodes = 100
for episode in range(episodes):
    state = env.reset()
    for _ in range(10):  # Limit to 10 steps per episode
        action = agent.choose_action(state)
        next_state, reward = env.step(action)
        agent.update(state, action, reward, next_state)
        state = next_state

print("Training completed.")
```
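The loop above only prints a completion message, so it gives no sense of whether anything was learned. One common way to monitor training is to record the total reward of each episode and smooth the series with a moving average. The sketch below shows the smoothing step only. `moving_average` is a hypothetical helper, and the episode returns are simulated from the same random reward rule as the dummy environment rather than taken from a real training run.

```python
import numpy as np

def moving_average(values, window=10):
    """Average each run of `window` consecutive values."""
    values = np.asarray(values, dtype=float)
    kernel = np.ones(window) / window
    return np.convolve(values, kernel, mode="valid")

rng = np.random.default_rng(0)
# Simulated totals for 100 episodes of 10 steps, rewards drawn from {-1, 0, 1}
episode_returns = rng.choice([-1, 0, 1], size=(100, 10)).sum(axis=1)

smoothed = moving_average(episode_returns, window=10)
print("number of smoothed points:", len(smoothed))
print("mean smoothed return:", smoothed.mean())
```

With purely random rewards the smoothed curve should hover around zero however long training runs. In an environment where actions matter, an upward trend in this curve is the usual sign that the agent is improving.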

Observations from the Dummy Implementation

- Simplicity: This implementation is not optimized for real-world problems but serves as a foundation to understand the Actor-Critic framework.

- Scalability: Real-world applications use deep neural networks (e.g., Deep Actor-Critic) instead of tables for high-dimensional state and action spaces.

- Flexibility: The algorithm can be extended to more complex environments and tasks.
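As a small step from tables toward neural networks, the Critic can be written as a linear function of state features. The sketch below assumes a hypothetical `LinearCritic` class and a `one_hot` feature helper. It uses the semi-gradient TD(0) update, which is one standard choice and not the only one.

```python
import numpy as np

class LinearCritic:
    """Critic with V(s) = w . phi(s) instead of one table entry per state."""

    def __init__(self, n_features, beta=0.1, gamma=0.9):
        self.w = np.zeros(n_features)  # Weights to be learned
        self.beta = beta               # Learning rate for Critic
        self.gamma = gamma             # Discount factor

    def value(self, features):
        return float(self.w @ features)

    def update(self, features, reward, next_features):
        td_error = reward + self.gamma * self.value(next_features) - self.value(features)
        # Semi-gradient TD(0): the gradient of V(s) with respect to w is phi(s)
        self.w += self.beta * td_error * features
        return td_error

def one_hot(state, n_states=5):
    """Feature vector with a 1 at the state's index (reduces to the tabular case)."""
    phi = np.zeros(n_states)
    phi[state] = 1.0
    return phi

critic = LinearCritic(n_features=5)
first_delta = critic.update(one_hot(0), reward=1.0, next_features=one_hot(1))
print("TD error:", first_delta)
print("V(state 0) after one update:", critic.value(one_hot(0)))
```

With one-hot features this behaves exactly like the table-based Critic. Richer features, or a neural network in place of the dot product, let the value estimate generalize across states that were never visited.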

Next Steps and Advanced Learning

To further explore Actor-Critic methods:

- Deep Actor-Critic: Learn about implementations using frameworks like TensorFlow or PyTorch.

  - Example: Proximal Policy Optimization (PPO), a popular advanced Actor-Critic algorithm.

- Continuous Action Spaces: Extend the dummy implementation to handle environments with continuous actions, such as in robotics.

- Exploration Strategies: Incorporate techniques like epsilon-greedy or entropy regularization to improve exploration.
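The two exploration ideas in the last item can be sketched in a few lines. `policy_entropy` and `epsilon_greedy` are hypothetical helper names, and the probabilities passed to them are made up for illustration.

```python
import numpy as np

def policy_entropy(probs):
    """Shannon entropy of an action distribution; higher means more exploratory."""
    probs = np.asarray(probs, dtype=float)
    nonzero = probs[probs > 0]  # Skip zero entries to avoid log(0)
    return float(-(nonzero * np.log(nonzero)).sum())

def epsilon_greedy(probs, epsilon, rng):
    """With probability epsilon pick a random action, otherwise the most likely one."""
    if rng.random() < epsilon:
        return int(rng.integers(len(probs)))
    return int(np.argmax(probs))

rng = np.random.default_rng(0)
uniform_policy = [1 / 3, 1 / 3, 1 / 3]
peaked_policy = [0.05, 0.9, 0.05]

print("entropy of uniform policy:", policy_entropy(uniform_policy))
print("entropy of peaked policy:", policy_entropy(peaked_policy))
print("epsilon-greedy pick:", epsilon_greedy(peaked_policy, epsilon=0.1, rng=rng))
```

Entropy regularization adds a small multiple of this entropy to the Actor’s objective so the policy does not collapse onto a single action too early. Epsilon-greedy instead forces an occasional random action regardless of what the policy prefers.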

Conclusion

The Actor-Critic method is a cornerstone of reinforcement learning, balancing the benefits of policy-based and value-based methods. This dummy implementation provides a stepping stone to understanding its mechanics and paves the way for tackling more complex RL challenges.

If you’re interested in learning more about reinforcement learning, consider exploring resources like OpenAI Gym (now maintained as Gymnasium) for hands-on practice or OpenAI’s Spinning Up in Deep RL.

By mastering the Actor-Critic method, you’ll be well-equipped to build intelligent agents capable of solving complex tasks in diverse domains.